Issue Ebook

cover story

Current Issue

lssue 10, 2025

Editors-in-Chief

Yunhe Pan, Aiguo Fei

Advisor Xicheng Lu

ISSN 2095-9184

CN 33-1389/TP

IF (2024 JCR) 2.9

| Submit Now |

- Current Issue

- Cover Articles

- Archive

- Virtual Issues

- Online First

Volume 26 Issue 10,2025

Special Feature on Theories and Applications of Financial Large Models

Theories and applications of financial large models AI Introduction

“In the field of xxx, expert xx has made significant progress. They established the xx system/explored the xx topic/verified the xx conjecture, offering solutions to tackle xx problems and paving the way for future research in xx.”Special Feature on Theories and Applications of Financial Large Models

Large investment model Enhanced Publication AI Introduction

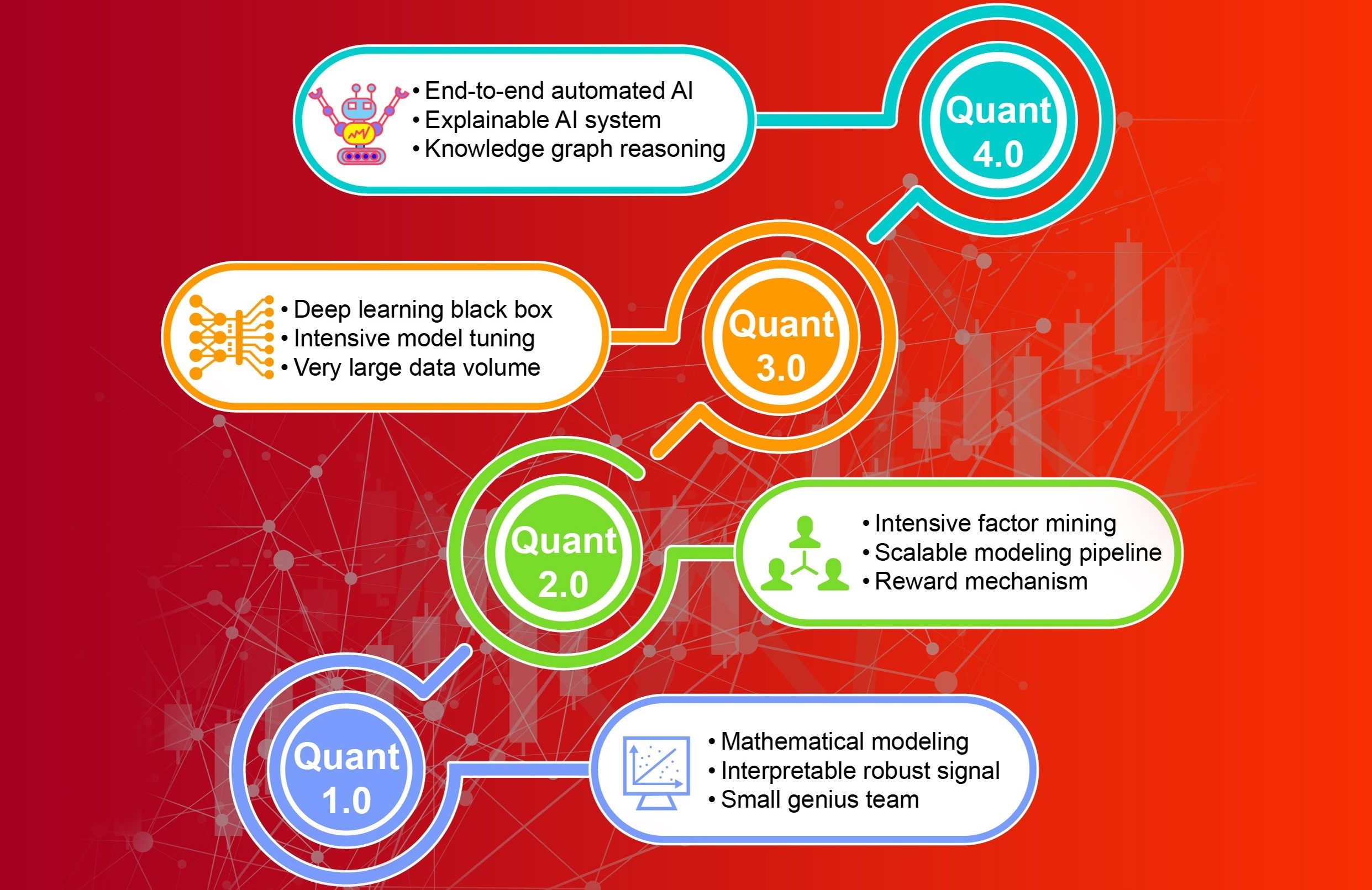

“In the field of quantitative investment, the large investment model (LIM) has been introduced to address the challenges of diminishing returns and increasing labor and time costs. Expert xx established the LIM system, which employs end-to-end learning and universal modeling to create an upstream foundation model capable of autonomously learning comprehensive signal patterns from diverse financial data. These "global patterns" are then transferred to downstream strategy modeling, optimizing performance for specific tasks. This provides solutions to enhance both performance and efficiency at scale in quantitative investment research.”Abstract:Traditional quantitative investment research is encountering diminishing returns alongside rising labor and time costs. To overcome these challenges, we introduce the large investment model (LIM), a novel research paradigm designed to enhance both performance and efficiency at scale. LIM employs end-to-end learning and universal modeling to create an upstream foundation model, which is capable of autonomously learning comprehensive signal patterns from diverse financial data spanning multiple exchanges, instruments, and frequencies. These "global patterns" are subsequently transferred to downstream strategy modeling, optimizing performance for specific tasks. We detail the system architecture design of LIM, address the technical challenges inherent in this approach, and outline potential directions for future research.Keywords:Artificial general intelligence;End-to-end;Large investment model;Quantitative investment;Foundation model;Multimodal large language model130|59|0Updated:2025-11-18Special Feature on Theories and Applications of Financial Large Models

Knowledge distillation for financial large language models: a systematic review of strategies, applications, and evaluation Enhanced Publication AI Introduction

“In the field of financial large language models, a comprehensive survey explores the interaction between knowledge distillation and FinLLMs. Expert researchers established a structured taxonomy and comprehensive evaluation framework, providing a clear roadmap to accelerate the development of distilled FinLLMs.”Abstract:Financial large language models (FinLLMs) offer immense potential for financial applications. While excessive deployment expenditures and considerable inference latency constitute major obstacles, as a prominent compression methodology, knowledge distillation (KD) offers an effective solution to these difficulties. A comprehensive survey is conducted in this work on how KD interacts with FinLLMs, covering three core aspects: strategy, application, and evaluation. At the strategy level, this review introduces a structured taxonomy to comparatively analyze existing distillation pathways. At the application level, this review puts forward a logical upstream–midstream–downstream framework to systematically explain the practical value of distilled models in the financial field. At the evaluation level, to tackle the absence of standards in the financial field, this review constructs a comprehensive evaluation framework that proceeds from multiple dimensions such as financial accuracy, reasoning fidelity, and robustness. In summary, this research aims to provide a clear roadmap for this interdisciplinary field, to accelerate the development of distilled FinLLMs.Keywords:Financial large language models (FinLLMs);Knowledge distillation;Model compression;Quantitative trading96|45|0Updated:2025-11-18Special Feature on Theories and Applications of Financial Large Models

A survey on large language model-based alpha mining Enhanced Publication AI Introduction

“In the field of quantitative research, this study presents a structured review of emerging LLM-based alpha mining systems, which provides solutions to redefine the future of quantitative research. Expert analysis suggests that LLM is a scalable interface for amplifying both domain expertise and algorithmic rigor.”Abstract:Alpha mining, which refers to the systematic discovery of data-driven signals predictive of future cross-sectional returns, is a central task in quantitative research. Recent progress in large language models (LLMs) has sparked interest in LLM-based alpha mining frameworks, which offer a promising middle ground between human-guided and fully automated alpha mining approaches and deliver both speed and semantic depth. This study presents a structured review of emerging LLM-based alpha mining systems from an agentic perspective, and analyzes the functional roles of LLMs, ranging from miners and evaluators to interactive assistants. Despite early progress, key challenges remain, including simplified performance evaluation, limited numerical understanding, lack of diversity and originality, weak exploration dynamics, temporal data leakage, and black-box risks and compliance challenges. Accordingly, we outline future directions, including improving reasoning alignment, expanding to new data modalities, rethinking evaluation protocols, and integrating LLMs into more general-purpose quantitative systems. Our analysis suggests that LLM is a scalable interface for amplifying both domain expertise and algorithmic rigor, as it amplifies domain expertise by transforming qualitative hypotheses into testable factors and enhances algorithmic rigor for rapid backtesting and semantic reasoning. The result is a complementary paradigm, where intuition, automation, and language-based reasoning converge to redefine the future of quantitative research.Keywords:Alpha mining;Quantitative investment;Large language models (LLMs);LLM agents;FinTech106|57|0Updated:2025-11-18Special Feature on Theories and Applications of Financial Large Models

FinSphere: a real-time stock analysis agent with instruction-tuned large language models and domain-specific tool integration Enhanced Publication AI Introduction

“In the field of financial large language models, researchers introduce AnalyScore and Stocksis to address evaluation metrics and analytical depth issues. FinSphere, an AI agent, generates professional-grade stock analysis reports, outperforming general-purpose LLMs and domain-specific FinLLMs.”Abstract:Current financial large language models (FinLLMs) exhibit two major limitations: the absence of standardized evaluation metrics for stock analysis quality and insufficient analytical depth. We address these limitations with two contributions. First, we introduce AnalyScore, a systematic framework for evaluating the quality of stock analysis. Second, we construct Stocksis, an expert-curated dataset designed to enhance the financial analysis capabilities of large language models (LLMs). Building on Stocksis, together with a novel integration framework and quantitative tools, we develop FinSphere, an artificial intelligence (AI) agent that generates professional-grade stock analysis reports. Evaluations with AnalyScore show that FinSphere consistently surpasses general-purpose LLMs, domain-specific FinLLMs, and existing agent-based systems, even when the latter are enhanced with real-time data access and few-shot guidance. The findings highlight FinSphere's significant advantages in analytical quality and real-world applicability.Keywords:Large language model (LLM);Instruction-tuned financial LLM;Real-time stock analysis;Evaluation framework and dataset40|30|0Updated:2025-11-18Special Feature on Theories and Applications of Financial Large Models

Can large language models effectively process and execute financial trading instructions? AI Introduction

“In the financial industry, the development of large language models (LLMs) has created transformative opportunities, especially in financial trading. Expert researchers established an intelligent trade order recognition pipeline, which provides solutions to solve the problem of integrating LLMs with trading systems.”Abstract:The development of large language models (LLMs) has created transformative opportunities for the financial industry, especially in the area of financial trading. However, how to integrate LLMs with trading systems has become a challenge. To address this problem, we propose an intelligent trade order recognition pipeline that enables the conversion of trade orders into a standard format for trade execution. The system improves the ability of human traders to interact with trading platforms while addressing the problem of misinformation acquisition in trade execution. In addition, we create a trade order dataset of 500 pieces of data to simulate the real-world trading scenarios. Moreover, we design several metrics to provide a comprehensive assessment of dataset reliability and the generative power of big models in finance by using five state-of-the-art LLMs on our dataset. The results show that most models generate syntactically valid JavaScript object notation (JSON) at high rates (about 80%–99%) and initiate clarifying questions in nearly all incomplete cases (about 90%–100%). However, end-to-end accuracy remains low (about 6%–14%), and missing information is substantial (about 12%–66%). Models also tend to over-interrogate—roughly 70%–80% of follow-ups are unnecessary—raising interaction costs and potential information-exposure risk. The research also demonstrates the feasibility of integrating our pipeline with the real-world trading systems, paving the way for practical deployment of LLM-based trade automation solutions.Keywords:Large language model;Financial instruction;Evaluation;Dataset construction63|30|0Updated:2025-11-18

SEE MORE

0 1 Hybrid-augmented intelligence: collaboration and cognition

0 4 Applications of artificial intelligence in intelligent manufacturing: a review*

0 5 Challenges and opportunities: from big data to knowledge in AI 2.0

0 6 Current trends in the development of intelligent unmanned autonomous systems

0 7 Recent advances in efficient computation of deep convolutional neural networks

0 8 Terahertz time-domain spectroscopy and micro-cavity components for probing samples: a review

0 9 Cross-media analysis and reasoning: advances and directions

SEE MORE

Videos

-

00:02:51

00:02:512023 Issue 1 | Scalability and efficiency challenges for the exascale supercomputing system: practice of a parallel supporting environment on the Sunway exascale prototype system

2023-12-30Play Total: 23 -

00:02:30

00:02:302023 Issue 6 | Model division multiple access for semantic communications

2023-12-30Play Total: 13 -

00:02:15

00:02:152022 Issue 10 | Discussion on a new paradigm of endogenous security towards 6G networks

2023-12-30Play Total: 2 -

00:02:22

00:02:222022 Issue 12 | Technology trends in large-scale high-efficiency network computing

2023-12-30Play Total: 2 -

00:02:48

00:02:482022 Issue 6 | Self-deployed execution environment for high performance computing

2022-08-03Play Total: 8 -

00:02:24

00:02:242022 Issue 2 | A full-process intelligent trial system for smart court

2022-05-17Play Total: 8 -

00:02:37

00:02:372022 Issue 3 | Automatic protocol reverse engineering for industrial control systems with dynamic taint analysis

2022-05-17Play Total: 5 -

00:05:36

00:05:36P1 Speech by Academician Baoyan Duan

2022-04-17Play Total: 12 -

00:02:27

00:02:27P2 Speech by Professor Min Sheng, Xidian University

2022-04-17Play Total: 7 -

00:02:37

00:02:37P3 Speech by Professor Yunsong Li, Xidian University

2022-04-17Play Total: 11

SEE MORE

0